I have written a few times about problems with peer review and publishing.* My own experience subsequently led me to the problem of coercive self-citation, defined in one study as “a request from an editor to add more citations from the editor’s journal for reasons that were not based on content.” I asked readers to send me documentation of their experiences so we could air them out. This is the result.

Introduction

First let me mention a new editorial in the journal Research Policy about the practices editors use to inflate Journal Impact Factors, a citation-based measure that many people use to compare journal quality or prestige. One of those practices is coercive self-citation. The author of that editorial, Ben Martin, cites approvingly a statement signed by a group of management and organizational studies editors:

I will refrain from encouraging authors to cite my journal, or those of my colleagues, unless the papers suggested are pertinent to specific issues raised within the context of the review. In other words, it should never be a requirement to cite papers from a particular journal unless the work is directly relevant and germane to the scientific conversation of the paper itself. I acknowledge that any blanket request to cite a particular journal, as well as the suggestion of citations without a clear explanation of how the additions address a specific gap in the paper, is coercive and unethical.

So that’s the gist of the issue. However, it’s not that easy to define coercive self-citation. In fact, we’re not doing a very good job of policing journal ethics in general, basically relying on weak enforcement of informal community standards. I’m not an expert on norms, but it seems to me that when you have strong material interests — big corporations using journals to print money at will, people desperate for academic promotions and job security, etc. — and little public scrutiny, it’s hard to regulate unethical behavior informally through norms.

The clearest cases involve asking for self-citations (a) before final acceptance, (b) to articles published within the last two years (the window the JIF counts), and (c) without substantive reason. But there is a lot short of that to object to as well. Martin suggests that, to answer whether a practice is ethical, we need to ask: “Would I, as editor, feel embarrassed if my activities came to light and would I therefore object if I was publicly named?” (Or, as my friend Matt Huffman used to say when the used-textbook buyers came around offering us cash for books we hadn’t paid for: how would it look in grainy hidden-camera footage?) I think that journal practices, which are generally very opaque, should be exposed to public view so that unethical or questionable practices can be held up to community standards.

Reports and responses

I received reports from about a dozen journals, but a few could not be verified or were too vague. These 10 were included under very broad criteria — I know that not everyone will agree that these practices are unethical, and I’m unsure where to draw the line myself. In each case below I asked the current editor if they would care to respond to the complaint, doing my best to give the editor enough information without exposing the identity of the informant.

Here in no particular order are the excerpts of correspondence from editors, with responses from the editors to me, if any. Some details, including dates, may have been changed to protect informants. I am grateful to the informants who wrote, and I urge anyone who knows, or thinks they know, who the informants are not to punish them for speaking up.

Journal of Social and Personal Relationships (2014-2015 period)

Congratulations on your manuscript “X” having been accepted for publication in Journal of Social and Personal Relationships. … your manuscript is now “in press” … The purpose of this message is to inform you of the production process and to clarify your role in the process …

IMPORTANT NOTICE:

As you update your manuscript:

1. CITATIONS – Remember to look for relevant and recent JSPR articles to cite. As you are probably aware, the ‘quality’ of a journal is increasingly defined by the “impact factor” reported in the Journal Citation Reports (from the Web of Science). The impact factor represents a ratio of the number of times that JSPR articles are cited divided by the number of JSPR articles published. Therefore, the 20XX ratings will focus (in part) on the number of times that JSPR articles published in 20XX and 20XX are cited during the 20XX publication year. So citing recent JSPR articles from 20XX and 20XX will improve our ranking on this particular ‘measure’ of quality (and, consequently, influence how others view the journal. Of course only cite those articles relevant to the point. You can find tables of contents for the past two years at…

Response from editor Geoff MacDonald:

Thanks for your email, and for bringing that to my attention. I agree that encouraging self-citation is inappropriate and I have just taken steps to make sure it won’t happen at JSPR again.

Sex Roles (2011-2013 period)

In addition to my own report, already posted, I received an identical report from another informant. The editor, Irene Frieze, wrote: “If possible, either in this section or later in the Introduction, note how your work builds on other studies published in our journal.”

Response from incoming editor Janice D. Yoder:

As outgoing editor of Psychology of Women Quarterly and as incoming editor of Sex Roles, I have not, and would not, as policy require that authors cite papers published in the journal to which they are submitting.

I have recommended, and likely will continue to recommend, papers to authors that I think may be relevant to their work, but without any requirement to cite those papers. I try to be clear that it is in this spirit of building on existing scholarship that I make these recommendations and to make the decision of whether or not to cite them up to the author. As an editor who has decision-making power, I know that my recommendations can be interpreted as requirements (or a wise path to follow for authors eager to publish) but I can say that I have not further pressured an author whose revision fails to cite a paper I recommended.

I also have referred to authors’ reference lists as a further indication that a paper’s content is not appropriate for the journal I edit. Although never the sole indicator and never based only on citations to the specific journal I edit, if a paper is framed without any reference to the existing literature across journals in the field then it is a sign to me that the authors should seek a different venue.

I value the concerns that have been raised here, and I certainly would be open to ideas to better guide my own practices.

European Sociological Review (2013)

In a decision letter notifying the author of a minor revise-and-resubmit, the editor wrote that the author had omitted some recent publications in ESR and elsewhere (none of them specified) and suggested the author update the references.

Response from editor Melinda Mills:

I welcome the debate about academic publishing in general, scrutiny of impact factors and specifically of editorial practices. Given the importance of publishing in our profession, I find it surprising how little is actually known about the ‘black box’ processes within academic journals and I applaud the push for more transparency and scrutiny in general about the review and publication process. Norms and practices in academic journals appear to be rapidly changing at the moment, with journals at the forefront of innovation taking radically different positions on editorial practices. The European Sociological Review (ESR) engages in rigorous peer review and most authors agree that it strengthens their work. But there are also new emerging models such as Sociological Science that give greater discretion to editors and focus on rapid publication. I agree with Cohen that this debate is necessary and would be beneficial to the field as a whole.

It is not a secret that the review and revision process can be a long (and winding) road, both at ESR and most sociology journals. If we go through the average timeline, it generally takes around 90 days for the first decision, followed by authors often taking up to six months to resubmit the revision. This is then often followed by a second (and sometimes third) round of reviews and revision, which in the end leaves us at ten to twelve months from original submission to acceptance. My own experience as an academic publishing on other journals is that it can regularly exceed one year. During the year under peer review and revisions, relevant articles have often been published. Surprisingly, few authors actually update their references or take into account new literature that was published after the initial submission. Perhaps this is understandable, since authors have no incentive to implement any changes that are not directly requested by reviewers.

When there has been a particularly protracted peer review process, I sometimes remind authors to update their literature review and take into account more recent publications, not only in ESR but also elsewhere. I believe that this benefits both authors, by giving them greater flexibility in revising their manuscripts, and readers, by providing them with more up-to-date articles. To be clear, it is certainly not the policy of the journal to coerce authors to self-cite ESR or any other outlets. It is vital to note that we have never rejected an article where the authors have not taken the advice or opportunity to update their references and this is not a formal policy of ESR or its Editors. If authors feel that nothing has happened in their field of research in the last year that is their own prerogative. As authors will note, with a good justification they can – and often do – refuse to make certain substantive revisions, which is a core fundament of academic freedom.

Perhaps a more crucial part of this debate is the use and prominence of journal impact factors themselves both within our discipline and how we compare to other disciplines. In many countries there is a move to use these metrics to distribute financing to Universities, increasing the stakes of these metrics. It is important to have some sort of metric gauge of the quality and impact of our publications and discipline. But we also know that different bibliometric tools have the tendency to produce different answers and that sociology fairs relatively worse in comparison to other disciplines. Conversely, leaving evaluation of research largely weighted by peer review can produce even more skewed interpretations if the peer evaluators do not represent an international view of the discipline. Metrics and internationally recognized peer reviewers would seem the most sensible mix.

Work and Occupations (2010-2011 period)

“I would like to accept your paper for publication on the condition that you address successfully reviewer X’s comments and the following:

2. The bibliography needs to be updated somewhat … . Consider citing, however critically, the following Work and Occupations articles on the italicized themes:

[concept: four W&O papers, three from the previous two years]

[concept: two W&O papers from the previous two years]

The current editor, Dan Cornfield, thanked me and chose not to respond for publication.

Sociological Forum (2014-2015 period)

I am pleased to inform you that your article … is going to press. …

In recent years, we published an article that is relevant to this essay and I would like to cite it here. I have worked it in as follows: [excerpt]

Most authors find this a helpful step as it links their work into an ongoing discourse, and thus, raises the visibility of their article.

Response from editor Karen Cerulo:

I have been editing Sociological Forum since 2007. I have processed close to 2500 submissions and have published close to 400 articles. During that time, I have never insisted that an author cite articles from our journal. However, during the production process–when an article has been accepted and I am preparing the manuscript for the publisher–I do sometimes point out to authors Sociological Forum pieces directly relevant to their article. I send authors the full citation along with a suggestion as to where the citation be discussed or noted. I also suggest changes to key words and article abstracts, My editorial board is fully aware of this strategy. We have discussed it at many of our editorial board meetings and I have received full support for this approach. I can say, unequivocally, that I do not insist that citations be added. And since the manuscripts are already accepted, there is no coercion involved. I think it is important that you note that on any blog post related to Sociological Forum

I cannot tell you how often an author sends me a cover letter with their submission telling me that Sociological Forum is the perfect journal for their research because of related ongoing dialogues in our pages. Yet, in many of these cases, the authors fail to reference the relevant dialogues via citations. Perhaps editors are most familiar with the debates and streams of thought currently unfolding in a journal. Thus, I believe it is my job as editor and my duty to both authors and the journal to suggest that authors consider making appropriate connections.

Unnamed journal (2014)

An article was desk-rejected (that is, rejected without being sent out for peer review) with only this explanation: “In light of the appropriateness of your manuscript for our journal, your manuscript has been denied publication in X.” When the author asked for more information, a journal staff member responded with possible reasons, including that the paper did not include any references to the articles in that journal. In my view the article was clearly within the subject area of the journal. I didn’t name the journal here because this wasn’t an official editor’s decision letter and the correspondence only suggested that might be the reason for the rejection.

Sociological Quarterly (2014-2015 period)

In a revise and resubmit decision letter:

Finally, as a favor to us, please take a few moments to review back issues of TSQ to make sure that you have cited any relevant previously published work from our journal. Since our ISI Impact Factor is determined by citations, we would like to make sure papers under consideration by the journal are referring to scholarship we have previously supported.

The current editors, Lisa Waldner and Betty Dobratz, have not yet responded.

Canadian Review of Sociology (2014-2015 period)

In a letter communicating acceptance conditional on minor changes, the editor asked the author to consider citing “additional Canadian Review of Sociology articles” to “help with the journal’s visibility.”

Response from current editor Rima Wilkes:

In the case you cite, the author got a fair review and received editorial comments at the final stages of correction. The request to add a few citations to the journal was not “coercive” because in no instance was it a condition of the paper either being reviewed or published.

Many authors are aware of, and make some attempt to cite the journal to which they are submitting prior to submission and specifically target those journals and to contribute to academic debate in them.

Major publications in the discipline, such as ASR, or academia more generally, such as Science, almost never publish articles that have no reference to debates in them.

Bigger journals are in the fortunate position of having authors submit articles that engage with debates in their own journal. Interestingly, the auto-citation patterns in those journals are seen as “natural” rather than “coerced”. Smaller journals are more likely to get submissions with no citations to that journal and this is the case for a large share of the articles that we receive.

Journals exist within a larger institutional structure that has certain demands. Perhaps the author who complained to you might want to reflect on what it says about their article and its potential future if they and other authors like them do not engage with their own work.

Social Science Research (2015)

At the end of a revise-and-resubmit memo, under “Comment from the Editor,” the author was asked to include “relevant citations from Social Science Research,” with none specified.

The current editor, Stephanie Moller, has not yet responded.

City & Community (2013)

In an acceptance letter, the author was asked to approve several changes made to the manuscript. One of the changes, made to make the paper more conversant with the “relevant literature,” added a sentence with several references, one or more of which were to City & Community papers not previously included.

One of the current co-editors, Sudhir Venkatesh, declined to comment because the correspondence occurred before the current editorial team’s tenure began.

Discussion

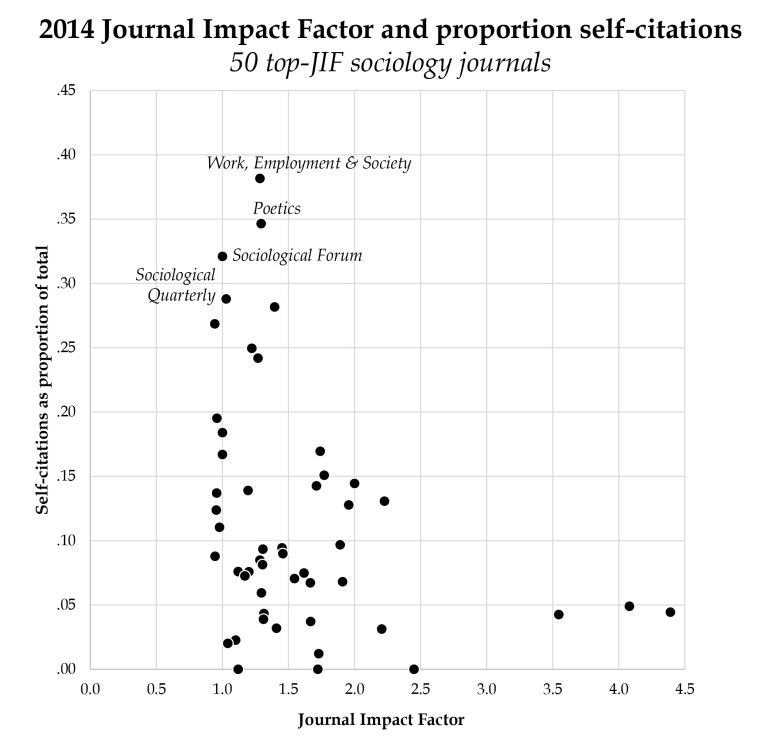

The Journal Impact Factor (JIF) is an especially dysfunctional part of our status-obsessed scholarly communication system. Self-citation is only one issue, but it’s a substantial one. I looked at 116 journals classified as sociology in 2014 by Web of Science (which produces the JIF), excluding some misplaced and non-English journals. WoS helpfully also offers a list excluding self-citations, but normal JIF rankings do not make this exclusion. (I put the list here.) On average, removing self-citations reduces the JIF by 14%. But there is a lot of variation. One would expect specialty journals to have high self-citation rates because the work they publish is closely related. Thus Armed Forces and Society has a 31% self-citation rate, and Work & Occupations has 25%. But others, like Gender & Society (13%) and Journal of Marriage and Family (15%), are not high. On the other hand, you would expect high-visibility journals to have high self-citation rates, if they publish better, more important work; but on this list the correlation between JIF and self-citation rate is -.25. Here is that relationship for the top 50 journals by JIF, with the top four by self-citation labeled (the three top-JIF journals at bottom-right are American Journal of Sociology, Annual Review of Sociology, and American Sociological Review).
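The arithmetic behind these percentages can be sketched briefly. Assuming the standard two-year definition (citations received in year Y to items a journal published in Y-1 and Y-2, divided by the citable items it published in those two years), excluding self-citations removes citations only from the numerator, so the proportional drop in the JIF equals the self-citation share of those citations. The Python below is a minimal illustration under that assumption; the `JournalYear` class, the function names, and all the counts are hypothetical and do not reproduce Web of Science data or any Web of Science API.

```python
from dataclasses import dataclass


@dataclass
class JournalYear:
    """Hypothetical JIF inputs for one journal in one JCR year (illustrative only)."""
    citable_items: int   # citable items published in years Y-1 and Y-2
    citations: int       # citations received in year Y to those items
    self_citations: int  # the subset of `citations` coming from the journal itself


def impact_factor(j: JournalYear) -> float:
    """Two-year JIF: citations in year Y to the prior two years' items, per item."""
    return j.citations / j.citable_items


def impact_factor_excluding_self(j: JournalYear) -> float:
    """Same ratio after dropping the journal's citations to itself from the numerator."""
    return (j.citations - j.self_citations) / j.citable_items


def self_citation_share(j: JournalYear) -> float:
    """Fraction of JIF-window citations that are self-citations.

    Because the denominator is unchanged, this also equals the proportional
    drop in the JIF when self-citations are excluded.
    """
    return j.self_citations / j.citations


if __name__ == "__main__":
    # Made-up counts, not data for any real journal.
    j = JournalYear(citable_items=100, citations=150, self_citations=45)
    print(f"JIF:                 {impact_factor(j):.2f}")                 # 1.50
    print(f"JIF excluding self:  {impact_factor_excluding_self(j):.2f}")  # 1.05
    print(f"Self-citation share: {self_citation_share(j):.0%}")           # 30%
```

With these made-up counts, 45 self-citations make up 30% of the 150 counted citations, and removing them lowers the JIF from 1.50 to 1.05, the same 30% drop. The sketch also shows that each added self-citation raises the JIF by 1 divided by the number of citable items, so a given number of encouraged citations moves a small journal's score more than a large one's.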

The top four self-citers are low-JIF journals. Two of them are mentioned above, but I have no idea what role self-citation encouragement plays in that. There are other weird distortions in JIFs that may or may not be intentional. Consider the June 2015 issue of Sociological Forum, which includes a special section, “Commemorating the Fiftieth Anniversary of the Civil Rights Laws.” That issue, just a few months old, as of yesterday includes the 9 most-cited articles that the journal published in the last two years. In fact, these 9 pieces have all been cited 9 times, all by each other — and each article currently has the designation of “Highly Cited Paper” from Web of Science (with a little trophy icon). The December 2014 issue of the same journal also gave itself an immediate 24 self-citations for a special “forum” feature. I am not suggesting the journal runs these forum discussion features to pump up its JIF, and I have nothing bad to say about their content — what’s wrong with a symposium-style feature in which the authors respond to each other’s work? But these cases illustrate what’s wrong with using citation counts to rank journals. As Martin’s piece explains, the JIF is highly susceptible to manipulation beyond self-citation promotion, for example by tinkering with the pre-publication queue of online articles, publishing editorial review essays, and of course outright fraud.

Anyway, my opinion is that journal editors should never add or request additional citations without clearly stated substantive reasons related to the content of the research and unrelated to the journal in which they are published. I realize that reasonable people disagree about this — and I encourage readers to respond in the comments below. I also hope that any editor would be willing to publicly stand by their practices, and I urge editors and journal management to let authors and readers see what they’re doing as much as possible.

However, I also think our whole journal system is pretty irreparably broken, so I put limited stock in the idea of improving its operation. My preference is to (1) fire the commercial publishers, (2) make research publication open-access with a very low bar for publication, (3) create an organized system of post-publication review to evaluate research quality, and (4) have professional associations republish or label what’s most important to promote it.

* Some relevant posts cover long review delays for little benefit; the problem of very similar publications; the harm to science done by arbitrary print-page limits; gender segregation in journal hierarchies; and how easy it is to fake data.

Thank you for doing the work to bring these practices into public discussion. I wonder whether we could have an ASA panel to discuss this? It seems like the ASA could organize a regular series of sessions on consequential issues in publishing (or in the discipline more generally) on which reasonable and informed people have different views.

Thanks for great info on publishing process and much to chew on re ethics of gaming the system.

I think your cure is worse than the disease and targets the wrong piece of the problem (tenure and promotion committees relying on JIF). Open access = high publication costs; no commercial publishers = fewer packaged library online-access tools that make lots of journals easily available; post-publication review = another layer of unpaid labor of uneven quality, driven mostly by elite scholars with predictable biases; labeling by professional associations (and publishing by them rather than commercial publishers) = shifting the conflict of interest to them as they pump up their own importance.

Remember that the “high-JIF” journals are legacies of an earlier age of status construction and had their own problems of exclusion (G&S was founded because gender theory couldn’t get published in the big 3, and ASA at that time declined to publish it because “it might divert good papers from ASR”; our professional association back then didn’t see its own biases). Excellence as a concept is contested, and newer ideas (and the associations formed around them) have to struggle to be seen. The dynamics of citation are driven less by commercial publishers (who can and do package all their journals for libraries through Project MUSE, JSTOR, etc.) than by JIF-obsessed (and lazy) tenure and promotion (and hiring and merit-raise) committees.

Thanks for the eye-opening piece. I know that as an author I am saddened when asked to incorporate unrelated articles, and as an editor, I tried hard not to ask authors to cite the journal (though I would refer authors to work that I genuinely thought might be helpful). I’ve thought a lot about the solutions you suggest here, and think that they open a whole new can of worms. I know libraries are paying commercial publishers a lot, and authors/reviewers get relatively little. But when I was editing G&S, my publisher helped me edit in ethical and thoughtful ways, without a maniacal focus on JIF. I also think that the review process plays a crucial role in bettering scholarship, and think it would be damaging to good science to publish with a low bar and do post-publication review and sponsorship; I can see this going seriously awry. So I still believe in working with ethical publishers and through a pre-publication review system, and agree with Myra about the importance of changing the JIF-obsessed culture in personnel committees.

This is so not good. This was the one place that I thought would remain sacred… SIGH… Here is the reason why I am an advocate of open access.

I am also more than slightly depressed by current editorial practices & the monopoly Journals have on knowledge production & access. I wholeheartedly agree with the suggestions concluding your post – I’ve been thinking a lot about this lately as well! I’m an academic outside of the academy (I work in non-profit, but still have an active collaborative research agenda), and I’m not so beholden to the publish-or-perish mentality for my livelihood. I also place a huge value on interdisciplinary work that is often hard to publish. How difficult do you think it would be to pursue the suggestions you’ve made? What kind of infrastructure would be needed? I would love to join a discussion of how we might be able to move towards something like what you’ve outlined here!

Here’s a white paper describing one way to get to open access and get out from under the journals: http://knconsultants.org/toward-a-sustainable-approach-to-open-access-publishing-and-archiving/

Journal editors encouraging self-citation is roughly the ethical equivalent of authors citing their own publications, reviewers suggesting their publications for citation to prospective authors, or professors assigning their publications to their students. Sometimes it’s gratuitous. Sometimes it’s justified. But I suspect it’s rarely scandalous. On the academic ethics scandal barometer, I give it 2 yawns.

The central problem is a lack of any oversight at all over journal editors. Editors can coerce self-citations. They can ignore their duties and let graduate assistants run journals. They can abandon any independent judgment and simply count the votes from reviews to make decisions. They can use deflections to protect their ideological beliefs through selective publication. They can outright fake reviews. They can use multiple rounds of R&Rs, and reject papers for ridiculous reasons after many sets of revisions. They can publish papers they like without review. They can publish their own papers without real review (e.g., assigning someone from their handpicked editorial board to serve as the editor on their paper).

If Retraction Watch has taught us anything, it is that scientists cannot be trusted to do the right thing by themselves. The very simple solution is to have an independent board in each discipline where appeals of editorial decisions and general concerns about the ethics of specific editors can be sent. If editors were accountable to independent scholars, and they knew they might be called on to defend their decisions and practices publicly, they would make better decisions and avoid ethically questionable behaviors.

I think James Wright, the now-retired former editor of Social Science Research, absolutely rushed through the Regnerus paper because he thought it would get a lot of citations and build up the impact factor of his journal.